By Edmund Chattoe-Brown

Introduction

The Journal of Artificial Societies and Social Simulation (hereafter JASSS) retains a distinctive position amongst journals publishing articles on social simulation and Agent-Based Modelling. Many journals have published a few Agent-Based Models, some have published quite a few, but it is hard to name any other journal that predominantly does this and has consistently done so over two decades. Using Web of Science on 25.07.22, there are 5540 hits including the search term <“agent-based model”> anywhere in their text. JASSS does indeed have the most of any single journal with 268 hits (5% of the total to the nearest integer). The basic search returns about 200 distinct journals and about half of these have 10 hits or fewer. Since this search is arranged by hit count, this means that the unlisted journals have even fewer hits than those listed, i.e. fewer than 7 per journal. This supports the claim that the great majority of journals have very limited engagement with Agent-Based Modelling. Note that the point here is to evidence tendencies effectively and not to claim that this specific search term tells us the precise relative frequency of articles on the subject of Agent-Based Modelling in different journals.
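The share quoted above is simple arithmetic and can be checked in a couple of lines. This is a minimal sketch using only the two counts reported in the text; the variable names are illustrative.

```python
# Counts reported in the text for the Web of Science search on 25.07.22.
total_hits = 5540  # all hits for the search term "agent-based model"
jasss_hits = 268   # hits attributed to JASSS

# Share of all hits accounted for by JASSS, as a percentage.
share_percent = 100 * jasss_hits / total_hits
print(f"JASSS share: {share_percent:.1f}% (nearest integer: {round(share_percent)}%)")
```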

This being so, it seems reasonable – and desirable for other practical reasons like being entirely open access, online and readily searchable – to use JASSS as a sample – though clearly not necessarily a representative sample – of what may be happening in Agent-Based Modelling more generally. This is the case study approach (Yin 2009) where smaller samples may be practically unavoidable to discuss richer or more complex phenomena like the actual structures of arguments rather than something quantitative like, say, the number of sources cited by each article.

This piece is motivated by the scepticism that some reviewers have displayed about such a case study approach focused on JASSS and conclusions drawn from it. It is actually quite strange to have the editors and reviewers of a journal argue against its ability to tell us anything useful about wider Agent-Based Modelling research even as a starting point (particularly since this approach has been used in articles previously published in the journal; see, for example, Meyer et al. 2009 and Hauke et al. 2017). Of course, it is a given that different journals have unique editorial policies, distinct reviewer pools and so on. However, this may mean, for example, that journals only irregularly publishing Agent-Based Models are actually less typical because it is more arbitrary who reviews for them and there may therefore be less reviewing skill and less consensus about the value of the articles involved. Anecdotally, I have found this to be true in medical journals where excellent articles rub shoulders with much more problematic ones in a small overall pool. The point of my argument is not to claim that JASSS can really stand in for ABM research as a whole – which it plainly cannot – but that, if the case study approach is to be accepted at all, JASSS is one of the few journals that qualifies for it on empirically justifiable grounds. Conversely, given the potentially distinctive character of journals and the wide spread of Agent-Based Modelling, attempts at representative sampling may be very challenging in resource terms.

Method and Results

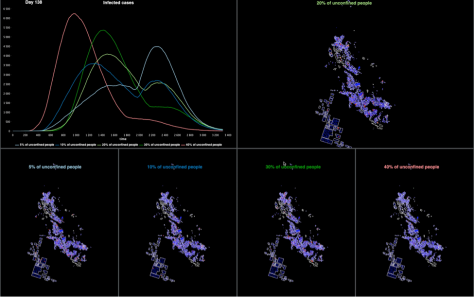

Again, using Web of Science on 04.07.22, I searched for the most highly cited articles containing the string “opinion dynamics”. I am well aware that this will not capture all articles that actually have opinion dynamics as their subject matter but this is not the intention. The intention is to describe a reproducible and measurable procedure correlated with the importance of articles so my results can be checked, criticised and extended. Comparing results based on other search terms would be part of that process. Then I took the first ten distinct journals that could be identified from this set of articles in order of citation count. The idea here was to see what journals had published the most important articles in the field overall – at least as identified by this particular search term – and then follow up their coverage of opinion dynamics generally. In addition, for each journal, I accessed the top 50 most cited articles and then checked how many articles containing the string “opinion dynamics” featured in that top 50. The idea here was to assess the extent to which opinion dynamics articles were important to the impact of a particular journal. Table 1 shows the results of this analysis.
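The two steps just described (taking the first ten distinct journals in citation order, then counting topic articles among each journal's 50 most cited) can be sketched in Python. This is an illustration under stated assumptions rather than a record of how the analysis was actually carried out: the record fields (`journal`, `citations`, `text`), the function names and the toy data are all hypothetical, and real Web of Science results would first have to be mapped onto this shape.

```python
def first_distinct_journals(records, n=10):
    """Walk topic hits in descending citation order and return the first n
    distinct journals, each paired with its most cited topic article count."""
    ranked = sorted(records, key=lambda r: r["citations"], reverse=True)
    journals = {}
    for rec in ranked:
        if len(journals) == n:
            break
        # The first time a journal appears is its most cited topic article.
        journals.setdefault(rec["journal"], rec["citations"])
    return list(journals.items())


def topic_articles_in_top_k(journal_records, term="opinion dynamics", k=50):
    """Count how many of a journal's k most cited articles contain the term."""
    top_k = sorted(journal_records, key=lambda r: r["citations"], reverse=True)[:k]
    return sum(term in r["text"].lower() for r in top_k)


# Hypothetical toy data: hits for the topic search across all journals.
topic_hits = [
    {"journal": "Journal A", "citations": 900},
    {"journal": "Journal B", "citations": 400},
    {"journal": "Journal A", "citations": 350},
    {"journal": "Journal C", "citations": 120},
]

# Hypothetical toy data: every article in one journal, on any topic.
journal_a_all = [
    {"citations": 1200, "text": "A survey of agent-based market models"},
    {"citations": 900, "text": "Opinion dynamics and bounded confidence"},
    {"citations": 350, "text": "Opinion dynamics on networks"},
]

print(first_distinct_journals(topic_hits, n=2))
print(topic_articles_in_top_k(journal_a_all, k=2))
```

Keeping the two functions separate mirrors the distinction drawn in the text between selecting journals by their single most influential article and measuring how much the topic matters within each journal.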

| Journal Title | “opinion dynamics” Articles in the Top 50 Most Cited | Citations of the Most Highly Cited “opinion dynamics” Article | Number of Articles Containing the String “opinion dynamics” |
| --- | --- | --- | --- |
| Reviews of Modern Physics | 0 | 2380 | 1 |
| JASSS | 6 | 1616 | 64 |
| International Journal of Modern Physics C | 4 | 376 | 72 |
| Dynamic Games and Applications | 1 | 338 | 5 |
| Physical Review Letters | 0 | 325 | 5 |
| Global Challenges | 1 | 272 | 1 |
| IEEE Transactions on Automatic Control | 0 | 269 | 38 |
| SIAM Review | 0 | 258 | 2 |
| Central European Journal of Operations Research | 1 | 241 | 1 |
| Physica A: Statistical Mechanics and Its Applications | 0 | 231 | 143 |

Table 1. The Coverage, Commitment and Importance of Different Journals in Regard to “opinion dynamics”: Top Ten by Citation Count of Most Influential Article.

This list attempts to provide two somewhat separate assessments of a journal with regard to “opinion dynamics”. The first is whether it has a substantial body of articles on the topic: Coverage. The second is whether, by the citation levels of the journal generally, “opinion dynamics” models are important to it: Commitment. These journals have been selected on a third dimension, their ability to contribute at least one very influential article to the literature as a whole: Importance.

The resulting patterns are interesting in several ways. Firstly, JASSS appears unique in this sample in being a clearly social science journal rather than a physical science journal or one dealing with instrumental problems like operations research or automatic control. An interesting corollary question is how many “opinion dynamics” models in a physics journal will have been reviewed by social scientists, or at least by modellers with a social science orientation. This is part of a wider question about whether, for example, physics journals are mainly interested in these models as formal systems rather than as having likely application to real societies. Secondly, 3 journals out of 10 have only a single “opinion dynamics” article – and a further journal has only 2 – which are, nonetheless, extremely highly cited relative to such articles as a whole. It is unclear whether this “only one but what a one” pattern has any wider significance. It should also be noted that the most highly cited article in JASSS is four times more highly cited than the next most cited. Only 4 of these journals out of 10 could really be said to have a usable sample of such articles for case study analysis. Thirdly, only 2 journals out of 10 have a significant number of articles sufficiently important that they appear in the top 50 most cited and 5 journals have no “opinion dynamics” articles in their top 50 most cited at all. This makes the point that a journal can have good coverage of the topic and contain at least one highly cited article without “opinion dynamics” necessarily being a commitment of the journal.

Thus it seems unusual for a journal to contribute at least one influential article to the field as a whole, to have several articles that are amongst its most cited and to have a non-trivial number of articles overall. Only one other journal in the top 10 meets all three criteria (International Journal of Modern Physics C). This result is corroborated in Table 2, which carries out the same analysis for all additional journals containing at least one highly cited “opinion dynamics” article (with an arbitrary cut-off of at least 100 citations for that article). There prove to be fourteen such journals in addition to the ten above.
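The three criteria can be applied mechanically to the Table 1 figures. The sketch below is only one possible operationalisation: the text does not fix numerical thresholds for “several” or “non-trivial”, so `MIN_IN_TOP_50 = 3` is an assumption and `MIN_ARTICLES = 30` is borrowed from the cut-off used in the third check later in this piece. Only `MIN_CITATIONS = 100` corresponds to a cut-off stated in the text. The names `JournalRow` and `meets_all_three` are illustrative.

```python
from typing import NamedTuple


class JournalRow(NamedTuple):
    title: str
    in_top_50: int      # Commitment: topic articles among the journal's 50 most cited
    max_citations: int  # Importance: citations of the most cited topic article
    n_articles: int     # Coverage: articles containing the string "opinion dynamics"


# Figures copied from Table 1.
TABLE_1 = [
    JournalRow("Reviews of Modern Physics", 0, 2380, 1),
    JournalRow("JASSS", 6, 1616, 64),
    JournalRow("International Journal of Modern Physics C", 4, 376, 72),
    JournalRow("Dynamic Games and Applications", 1, 338, 5),
    JournalRow("Physical Review Letters", 0, 325, 5),
    JournalRow("Global Challenges", 1, 272, 1),
    JournalRow("IEEE Transactions on Automatic Control", 0, 269, 38),
    JournalRow("SIAM Review", 0, 258, 2),
    JournalRow("Central European Journal of Operations Research", 1, 241, 1),
    JournalRow("Physica A: Statistical Mechanics and Its Applications", 0, 231, 143),
]

MIN_CITATIONS = 100  # cut-off stated in the text for a highly cited article
MIN_IN_TOP_50 = 3    # ASSUMPTION: one reading of "several"
MIN_ARTICLES = 30    # ASSUMPTION: borrowed from the third check's cut-off


def meets_all_three(row: JournalRow) -> bool:
    """True if a journal satisfies Importance, Commitment and Coverage."""
    return (
        row.max_citations >= MIN_CITATIONS
        and row.in_top_50 >= MIN_IN_TOP_50
        and row.n_articles >= MIN_ARTICLES
    )


qualifying = [row.title for row in TABLE_1 if meets_all_three(row)]
print(qualifying)
```

Under these assumed thresholds only JASSS and International Journal of Modern Physics C qualify, which matches the reading of Table 1 given above. Different but still reasonable thresholds could change the outcome, which is why they are exposed as named constants.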

| Journal Title | “opinion dynamics” Articles in the Top 50 Most Cited | Citations of the Most Highly Cited “opinion dynamics” Article | Number of Articles Containing the String “opinion dynamics” |
| --- | --- | --- | --- |
| Mathematics of Operations Research | 1 | 215 | 2 |
| Information Sciences | 0 | 186 | 14 |
| Physica D: Nonlinear Phenomena | 0 | 182 | 4 |
| Journal of Complex Networks | 1 | 177 | 5 |
| Annual Reviews in Control | 2 | 165 | 4 |
| Information Fusion | 0 | 154 | 11 |
| IEEE Transactions on Control of Network Systems | 3 | 151 | 12 |
| Automatica | 0 | 141 | 32 |
| Public Opinion Quarterly | 0 | 132 | 5 |
| Physical Review E | 0 | 129 | 74 |
| SIAM Journal on Control and Optimization | 0 | 127 | 13 |
| Europhysics Letters | 0 | 116 | 3 |
| Knowledge-Based Systems | 0 | 112 | 5 |
| Scientific Reports | 0 | 111 | 26 |

Table 2. The Coverage, Commitment and Importance of Different Journals in Regard to “opinion dynamics”: All Remaining Distinct Journals whose most important “opinion dynamics” article receives at least 100 citations.

Table 2 confirms the dominance of physical science journals and those solving instrumental problems as opposed to those evidently dealing with the social sciences, although a few terms like complex networks are ambiguous in this regard. Further, it confirms the scarcity of journals that simultaneously contribute at least one influential article to the wider field, have a sensibly sized sample of articles on this topic – so that provisional but nonetheless empirical hypotheses might be derived from a case study – and have “opinion dynamics” articles in their top 50 most cited articles as a sign of the importance of the topic to the journal and its readers. To some extent, however, the latter confirmation is an unavoidable artefact of the sampling strategy. As the most cited article becomes less highly cited, the chance it will appear in the top 50 most cited for a particular journal will almost certainly fall unless the journal is very new or generally not highly cited.

As a third independent check, I again used Web of Science to identify all journals which had – somewhat arbitrarily – at least 30 articles on “opinion dynamics”, giving some sense of their contribution. Only two more journals (see Table 3) not already occurring in the two tables above were identified. Generally, this analysis considers only journal articles and not conference proceedings and book chapter serials whose peer review status is less clear/comparable.

| Journal Title | “opinion dynamics” Articles in the Top 50 Most Cited | Citations of the Most Highly Cited “opinion dynamics” Article | Number of Articles Containing the String “opinion dynamics” |
| --- | --- | --- | --- |
| Advances in Complex Systems | 5 | 54 | 42 |
| Plos One | 0 | 53 | 32 |

Table 3. The Coverage, Commitment and Importance of Different Journals: All Journals with at Least 30 “opinion dynamics” hits not already listed in Tables 1 and 2.

This cross check shows that while the additional journals do have a sample of articles large enough to form the basis for a case study, they either have not yet contributed a really influential article to the wider field (their most cited article has fewer than half the citations of the journals which qualify for Tables 1 and 2), do not have a high commitment to opinion dynamics (in terms of impact within the journal and among its readers) or both.
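The “either, or both” reading of Table 3 can be made explicit with a short check. This is a sketch under assumptions: the comparison value of 111 is the lowest “most highly cited article” count among the journals in Tables 1 and 2 (Scientific Reports), and treating zero articles in the top 50 as a lack of commitment is an illustrative threshold, not one stated in the text. The names `table_3` and `shortfalls` are hypothetical.

```python
# Lowest most-cited-article count among journals in Tables 1 and 2
# (Scientific Reports, 111 citations).
LOWEST_QUALIFYING_CITATIONS = 111

# Figures copied from Table 3.
table_3 = {
    "Advances in Complex Systems": {"in_top_50": 5, "max_citations": 54, "n_articles": 42},
    "Plos One": {"in_top_50": 0, "max_citations": 53, "n_articles": 32},
}

shortfalls = {}
for title, row in table_3.items():
    missing = []
    # Importance: fewer than half the citations of the weakest qualifying journal.
    if row["max_citations"] < LOWEST_QUALIFYING_CITATIONS / 2:
        missing.append("importance")
    # Commitment: ASSUMPTION that no topic articles in the top 50 means low commitment.
    if row["in_top_50"] == 0:
        missing.append("commitment")
    shortfalls[title] = missing

for title, missing in shortfalls.items():
    print(f"{title}: lacks {', '.join(missing)}")
```

On these figures Advances in Complex Systems falls short on importance only, while Plos One falls short on both importance and commitment.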

Before concluding this analysis, it is worth briefly reflecting on what these three criteria jointly tell us – though other criteria could also be used in further research. By sampling on highly cited articles we focus on journals that have managed to go beyond their core readership and influence the field as a whole. There is a danger that journals that have never done this are merely “talking to themselves” and may therefore form a less effective basis for a case study speaking to the field as a whole. By attending to the number of articles in the top 50 for the journal, we get a sense of whether the topic is central (or only peripheral) to that journal/its readership and, again, journals where the topic is central stand a chance of being better case studies than those where it is peripheral. The criterion of having enough articles is simply a practical one for conducting a meaningful case study. Researchers using different methods may disagree about how many instances you need to draw useful conclusions but there is general agreement that it is more than one!

Analysis and Conclusions

The present article was motivated by an attempt to evaluate the claim that JASSS may be parochial and therefore not constitute a suitable basis for provisional hypotheses generated by case study analysis of its articles. Although the argument presented here is clearly rough and ready – and could be improved on by subsequent researchers – it does not appear to support this claim. JASSS actually seems to be one of very few journals – arguably the only social science one – that simultaneously has made at least one really influential contribution to the wider field of opinion dynamics, has a large enough number of articles on the topic for plausible generalisation and has quite a few such articles in its top 50, which shows the importance of the topic to the journal and its wider readership. Unless one wishes to reject case study analysis altogether, there are – in fact – very few other journals on which it can effectively be done for this topic.

But actually, my main conclusion is a wider reflection on peer reviewing, sampling and scientific progress based on reviewer resistance to the case study approach. There are 1386 articles with the search term “opinion dynamics” in Web of Science as of 25.07.22. It is clearly not realistic for one article – or even one book – to analyse all that content, particularly qualitatively. This being so we have to consider what is practical and adequate to generate hypotheses suitable for publication and further development of research along these lines. Case studies of single journals are not the only strategy but do have a recognised academic tradition in methodology (Brown 2008). We could sample randomly from the population of articles but I have never yet seen such a qualitative analysis based on sampling and it is not clear whether it would be any better received by potential reviewers. (In particular, with many journals each having only a few examples of Agent-Based Models, realistically low sampling rates would leave many journals unrepresented altogether which would be a problem if they had distinctive approaches.) Most journals – including JASSS – have word limits and this restricts how much you can report. Qualitative analysis is more drawn-out than quantitative analysis which limits this research style further in terms of practical sample sizes. Both reading whole articles for analysis and writing up the resulting conclusions take more resources of time and word count. As long as one does not claim that a qualitative analysis from JASSS can stand for all Agent-Based Modelling – but is merely a properly grounded hypothesis for further investigation – and shows one’s working properly to support that further investigation, it isn’t really clear why that shouldn’t be sufficient for publication, particularly as I have now shown that JASSS isn’t notably parochial along several potentially relevant dimensions.

If a reviewer merely conjectures that your results won’t generalise, isn’t the burden of proof then on them to do the corresponding analysis and publish it? Otherwise the danger is that we are setting conjecture against actual evidence – however imperfect – and this runs the risk of slowing scientific progress by favouring research compatible with traditionally approved perspectives in publication. It might be useful to revisit the everyday idea of burden of proof in assessing the arguments of reviewers. What does it take in terms of evidence and argument (rather than simply power) for a comment by a reviewer to scientifically require an answer? It is a commonplace that a disproved hypothesis is more valuable to science than a mere conjecture or something that cannot be proven one way or another. One reason for this is that scientific procedure illustrates methodological possibility as well as generating actual results. A sample from JASSS may not stand for all research but it shows how a conclusion might ultimately be reached for all research if the resources were available and the administrative constraints of academic publishing could be overcome.

As I have argued previously (Chattoe-Brown 2022), and as has now been pleasingly illustrated (Keijzer 2022), this situation may create an important and distinctive role for RofASSS. It may be valuable to get hypotheses, particularly ones that potentially go against the prevailing wisdom, “out there” so they can subsequently be tested more rigorously rather than having to wait until the framer of the hypothesis can meet what may be a counsel of perfection from peer reviewers. Another issue with reviewing is a tendency to say what will not do rather than what will do. This rather puts the author at the mercy of reviewers during the revision process. RofASSS can also be used to hive off “contextual” analyses – like this one regarding what it might mean for a journal to be parochial – so that they can be developed in outline for the general benefit of the Agent-Based Modelling community – rather than having to add length to specific articles depending on the tastes of particular reviewers.

Finally, as should be obvious, I have only suggested that JASSS is not parochial in regard to articles involving the string “opinion dynamics”. However, I have also illustrated how this kind of analysis could be done systematically for different topics to justify the claim that a particular journal can serve as a reasonable basis for a case study.

Acknowledgements

This analysis was supported by the project “Towards Realistic Computational Models Of Social Influence Dynamics” (ES/S015159/1), funded by ESRC via ORA Round 5.

References

Brown, Patricia Anne (2008) ‘A Review of the Literature on Case Study Research’, Canadian Journal for New Scholars in Education/Revue Canadienne des Jeunes Chercheures et Chercheurs en Éducation, 1(1), July, pp. 1-13, https://journalhosting.ucalgary.ca/index.php/cjnse/article/view/30395.

Chattoe-Brown, E. (2022) ‘If You Want to Be Cited, Don’t Validate Your Agent-Based Model: A Tentative Hypothesis Badly in Need of Refutation’, Review of Artificial Societies and Social Simulation, 1st Feb 2022. https://rofasss.org/2022/02/01/citing-od-models

Hauke, Jonas, Lorscheid, Iris and Meyer, Matthias (2017) ‘Recent Development of Social Simulation as Reflected in JASSS Between 2008 and 2014: A Citation and Co-Citation Analysis’, Journal of Artificial Societies and Social Simulation, 20(1), 5. https://www.jasss.org/20/1/5.html. doi:10.18564/jasss.3238

Keijzer, M. (2022) ‘If You Want to be Cited, Calibrate Your Agent-Based Model: Reply to Chattoe-Brown’, Review of Artificial Societies and Social Simulation, 9th Mar 2022. https://rofasss.org/2022/03/09/Keijzer-reply-to-Chattoe-Brown

Meyer, Matthias, Lorscheid, Iris and Troitzsch, Klaus G. (2009) ‘The Development of Social Simulation as Reflected in the First Ten Years of JASSS: A Citation and Co-Citation Analysis’, Journal of Artificial Societies and Social Simulation, 12(4), 12. https://www.jasss.org/12/4/12.html.

Yin, R. K. (2009) Case Study Research: Design and Methods, fourth edition (Thousand Oaks, CA: Sage).

Chattoe-Brown, E. (2022) Is The Journal of Artificial Societies and Social Simulation Parochial? What Might That Mean? Why Might It Matter? Review of Artificial Societies and Social Simulation, 10th Sept 2022. https://rofasss.org/2022/09/10/is-the-journal-of-artificial-societies-and-social-simulation-parochial-what-might-that-mean-why-might-it-matter/

© The authors under the Creative Commons’ Attribution-NoDerivs (CC BY-ND) Licence (v4.0)