Lessons from a session at SocSimFest 2023

By Gary Polhill and Juliette Rouchier

Bruce Edmonds organized a stimulating session at the SocSimFest held 15-16 March 2023, entitled “How to do wrong using Social Simulation – as a result of arrogance, laziness or ill intent.” One of the presentations (Rouchier 2023) covered the modelling used to justify lockdowns in various countries. This talk concentrated on the harms lockdowns caused and suggested that they were unnecessary; a discourse that is seldom heard in the media, and one that challenges the idea that a scientific consensus exists in real time and could lead to the best decision. There was some ‘vigorous’ debate afterwards, but here we expand on an important point that came out of that debate: modelling the effects of Covid to inform policy on managing the disease requires much more than epidemiological modelling. We might speculate, then, whether in general, modelling for policy intervention means ensuring greater coverage of the wider system than might be deemed strictly necessary for the immediate policy question in hand. Though such speculation has apparent consequences for model complicatedness that go beyond Sun et al.’s (2016) ‘Medawar zone’ for empirical ABM, there is an interpretation of this requirement for extended coverage that is also compatible with preferences for simpler models.

Going beyond the immediate case of Covid would require the identification of commonalities in the processes of decision making that could be extrapolated to other situations. We are less interested in that here than in making the case that simulation for policy analysis in the context of Covid entails greater coverage of the system than might be expected given the immediate questions in hand. The expertise of Rouchier means our focus is primarily on the experience of Covid in France. Generalising the principle of wider coverage beyond this case is a conjecture that we propose.

Handling Covid: an evaluation that is still in progress

Whether governments were right or wrong to implement lockdowns of varying severity is a matter that will be for historians to debate. During that time various researchers developed models, including agent-based models, that were used to advise policymakers on handling an emergency situation predicated on higher rates of mortality and hospitalisation.[1] Assessing the effectiveness of the lockdowns empirically would require us to be able to collect data from parallel universes in which they were not implemented. The fact that we cannot do this leaves us, as Rouchier pointed out, either comparing outcomes with models’ predictions – which is problematic if the models are not trusted – or comparing outcomes across countries with different lockdown policies – which has so far been inconclusive, and is in any case problematic because of differences in culture and geography from one nation to another. Such comparison will nevertheless be the most fruitful in time, although the differences of implementation among countries will doubtless induce long discussions about the most important factors to consider for defining relevant Non-Pharmaceutical Interventions (NPI).[2]

The effects of the lockdowns themselves on people’s mental and physical health, child development, and on the economy and working practices, are also the subject of emerging data post-lockdown. Some of these consequences have been severe – not least for the individuals concerned. Though not germane to the central argument of this brief document, it is worth noting that the same issue with unobservable parallel universes means that scientific rather than historical assessment of whether these outcomes are better or worse than any outcomes for those individuals and society at large in the absence of lockdowns is also impossible.

For our purposes, the most significant aspect of this second point is that the discussion has arisen after the epidemic emergency. First, it is noteworthy that these matters could perfectly well have been considered in models during the crisis. Indeed, contrasting the positive effect (saving lives or saving a public service) with negative effects (children’s withdrawal from education,[3] increasing psychological distress, not to mention domestic abuse – Usta et al. 2021) is typically what cost-benefit analysis, based on multi-criteria modelling, is supposed to elicit (Roy, 1996). In modelling for public policy decision-making, it is particularly clear that there is no universally ‘superior’ or ‘optimum’ indicator to be used for comparing options; rather, several indicators are needed to evaluate diverse alternative policies. A discussion about the best decision for a population has to be based on the best description of possible policies and their evaluations according to the chosen indicators (Pluchinotta et al., 2022). This means that a hierarchy of values has to be made explicit to justify the hierarchy of most important indicators. During the Covid crisis one question that could have been asked (if it was not) is: who is the most vulnerable population to protect? Is it old people because of disease or young people because of potential threats to their future chances in life?

Second, it is clear that this answer could vary in time with new information and the dynamics of Covid variants. For example, as soon as Omicron was announced by South Africa’s doctors, it was said to be less dangerous than earlier variants.[4] In that sense, the discussion of balancing priorities, in a dynamic way, in this historical period is very typical of what could also be central in other public discussions where the whole population is facing a highly uncertain future, and where the evolution of knowledge is rapid. But it is difficult to know in advance which indicators should be considered since some signals can be very weak at some point in time, but then be confirmed as highly relevant later on – essentially this is the problem of omitted-variable bias.

The discussion about risks to mental health was already lively in 2020: some psychologists were quick to point out the risk for people with mental health issues or women with violent husbands;[5] while the discussion about effects on children started early in 2020 (Singh et al., 2020). However, this issue only started to be considered publicly by the French government a year and a half later. One interpretation of the time lag is that the signal seemed too weak for non-specialists early on, when the specialists had already seen the disturbing signs.

In science, we have no definitive rule to decide when a weak signal at present will later turn out to be truly significant. Rather, it is ‘society’ as a whole that decides on the value of different indicators (sometimes only with the wisdom of hindsight) and scientists should provide knowledge on these. This goes back to classical questions of hierarchy of values about the diverse stakes people hold in questions that recur perennially in decision science.
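The dependence of a policy ranking on an explicit hierarchy of values can be illustrated with a minimal sketch. It uses a plain weighted sum, which is a deliberate simplification and not the outranking methodology of Roy (1996). The policy names, indicator scores and weights are hypothetical placeholders chosen only to show the mechanism; they are not empirical estimates of anything.

```python
from typing import Dict, List

# Hypothetical indicator scores in [0, 1], higher is better.
# Placeholders for illustration only, NOT empirical estimates.
POLICIES: Dict[str, Dict[str, float]] = {
    "policy_a": {"hospital_capacity": 0.9, "education": 0.2, "mental_health": 0.3},
    "policy_b": {"hospital_capacity": 0.6, "education": 0.7, "mental_health": 0.6},
}

# Two hypothetical hierarchies of values, expressed as indicator weights.
HOSPITAL_FIRST = {"hospital_capacity": 0.8, "education": 0.1, "mental_health": 0.1}
YOUTH_FIRST = {"hospital_capacity": 0.2, "education": 0.5, "mental_health": 0.3}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of indicator scores under one set of value weights."""
    total_weight = sum(weights.values())
    return sum(weights[k] * scores[k] for k in weights) / total_weight


def rank(policies: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> List[str]:
    """Order policy names from best to worst under the given weights."""
    return sorted(
        policies,
        key=lambda name: weighted_score(policies[name], weights),
        reverse=True,
    )


for label, weights in [("hospital first", HOSPITAL_FIRST), ("youth first", YOUTH_FIRST)]:
    print(label, "->", rank(POLICIES, weights))
```

With these placeholder numbers the preferred policy flips when the weights change, which is the point: the indicators alone do not decide, and the hierarchy of values has to be stated before any option can be called ‘best’.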

Modelling for policy making: tension between complexity and elegance?

Edmonds (2022) presented a paper at SSC 2022 outlining four ‘levels’ of rigour needed when conducting social simulation exercises, reserving the highest level for using agent-based models to inform public policy. Page limitations for conference submissions meant he was unable to articulate in the paper as full a list of the stipulations for rigour in the fourth level as he was for the other three. However, Rouchier’s talk at the SocSimFest brought into sharp focus that at least one of those stipulations is that models of public policy should always have broader coverage of the system than is strictly necessary for the immediate question in hand. This has the strange-seeming consequence that exclusively epidemiological models are inadequate to the task of modelling how a contagious illness should be controlled. For any control measure that is proposed, such a stipulation entails that the model be capable of exploring not only the effect on disease spread, but also potential wider effects of relevance to societal matters generally in the domain of other government departments, such as energy, the environment, business, justice, transportation, welfare, agriculture, immigration, and international relations.

The conjecture that for any modelling challenge in complex or wicked systems, thorough policy analysis entails broader system coverage than the immediate problem in hand (KIDS-like – see Edmonds & Moss 2005), is controversial for those who like simple, elegant, uncomplicated models (KISS-like). Worse than that, while Sun et al. (2016), for example, acknowledge that the Medawar zone for empirical models is at a higher level of complicatedness than for theoretical models, the coverage implied by this conjecture is broader still. The level of complicatedness implied will also be controversial for those who don’t mind complex, complicated models with large numbers of parameters. It suggests that we might need to model ‘everything’, or that policy models are then too complicated for us to understand, and as a consequence, perhaps using simulations to analyse policy scenarios is inappropriate. The following considers each of these objections in turn with a view to developing a more nuanced analysis of the implications of such a conjecture.

Modelling ‘everything’ is a matter that is the easiest to reject as a necessary implication of modelling ‘more things’. Modelling, say, the international relations implications of proposed national policy on managing a global pandemic, does not mean one is modelling the lifecycle of extremophile bacteria, or ocean-atmosphere interactions arising from climate change, or the influence of in-home displays on domestic energy consumption, to choose a few random examples of a myriad things that are not modelled. It is not even clear what modelling ‘everything’ really means – phenomena in social and environmental systems can be modelled at diverse levels of detail, at scales from molecular to global. Fundamentally, it is not even clear that we have anything like a perception of ‘everything’, and hence no basis for representing ‘everything’ in a model. Further, the Borges argument[6] holds in that having a model that would be the same as reality makes it useless to study as it is then wiser to study reality directly. Neither universal agreement nor objective criteria[7] exist for the ‘correct’ level of complexity and complication at which to model phenomena, but failing to engage with a broader perspective on the systemic effects of phenomena leaves one open to the kind of excoriating criticism exemplified by Keen’s (2021) attack on economists’ analysis of climate change.

At the other end of the scale, doing no modelling at all is also a mistake. As Polhill and Edmonds (2023) argue, leaving simulation models out of policy analysis essentially makes the implicit assumption that human cognition is adequate to the task of deciding on appropriate courses of action facing a complex situation. There is no reason (besides hubris) to believe that this is necessarily the case, and plenty of evidence that it is not. Not least of such evidence is that many of the difficult decisions we now face around such things as managing climate change and biodiversity have been forced upon us by poor decision-making in the past.

Cognitive constraints and multiple modellers

This necessity to consider many dimensions of social life within models that are ‘close enough’ to reality to convince decision-makers induces a risk of ‘over’-complexity. The main danger is building models that are too complicated for us to understand. This is a valid concern in the sense that building an artificial system that, though simpler than the real world, is still beyond human comprehension, hardly seems a worthwhile activity. The other concern is that of the knowledge needed by the modeller: how can one person be able to imagine an integrative model which includes (for example) employment, transportation, food, schools, international economy, and any other issue which is needed for a serious analysis of the consequences of policy decisions?

Options that still entail broader coverage but not a single overcomplicated integrated model are: 1/ step-by-step increase in the complexity of the model in a community of practitioners; 2/ confrontation of different simple models with different hypotheses and questions; 3/ superposition and integration of simple models into one, through serious work on the convergence of ontologies (with a nod to Voinov and Shugart’s (2013) warnings).

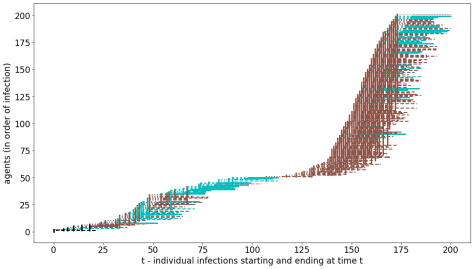

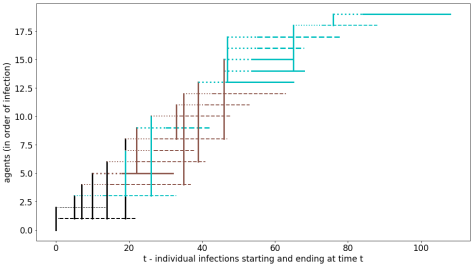

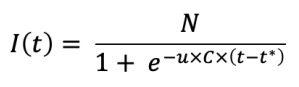

- To illustrate this first approach, let us stay with the case of the epidemic model. One can start with an epidemiological simulation which shows that if we tell people to stay at home, we will cut hospitalizations by enough that health services will not be overwhelmed. But then we are worried that this might have a negative impact on the economy. So we bring in modelling components that simulate all four combinations of person/business-to-person/business transactions, and this shows that if we pay businesses to keep employees on their books, we have a chance of rebooting the economy after the pandemic is over.[8] But then we are concerned that businesses might lie about who their employees are, that office-workers who can continue to work at home are privileged over those with other kinds of job, that those with a child-caring role in their households are disadvantaged in their ability to work at home if the schools are closed, and that the mental health of those who live alone is disproportionately impacted through cutting off their only means of social intercourse. And so more modelling components are brought in. In a social context, this incremental addition of the components of a complicated model may mean it is more comprehensible to the team of modellers.

If the policy maker really wants to increase her capacity to understand her possible actions with models, she would also have to make sure to invite several researchers for each modelled aspect, as no single social science is free of controversy, and the discussions about consequences should rely on contradictory theories. If a complex model has to be built, it can indeed propose different hypotheses on behaviours, the functioning of the economy, and health risks depending on the type of encounter.[9] It is then more of a modelling ‘framework’ with several options for running various different specific models with different implementation options. One advantage of modelling that holds even in cases where the Borges argument applies is that testing out different hypotheses is harmless for humans (unlike empirical experiments) and can produce possible futures, seen as trajectories that can then be evaluated in real time with relevant indicators. With a serious group of modellers and statisticians, providing contradicting views, not only can the model be useful for developing prospective views, but also the evaluation of hypotheses could be done rapidly.
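As a rough illustration of what such a ‘framework’ with interchangeable hypotheses might look like, the sketch below plugs three alternative behavioural assumptions into the same toy compartmental (SIR-style) epidemic model and compares the trajectories they produce. Everything here is assumed for the sake of the example: the function names, the three behavioural hypotheses and all parameter values are hypothetical, and a policy-relevant framework would of course have many more components than contact behaviour.

```python
from typing import Callable, Dict, List

# A behavioural hypothesis maps (day, infected fraction) to a contact multiplier.
Hypothesis = Callable[[int, float], float]


def no_change(day: int, infected: float) -> float:
    """Hypothesis 1: people do not alter their contacts."""
    return 1.0


def stay_at_home(day: int, infected: float, start_day: int = 20) -> float:
    """Hypothesis 2: contacts drop to 40% once an order starts (assumed values)."""
    return 0.4 if day >= start_day else 1.0


def fear_driven(day: int, infected: float) -> float:
    """Hypothesis 3: people spontaneously reduce contacts as prevalence rises."""
    return 1.0 / (1.0 + 20.0 * infected)


def run_sir(contact: Hypothesis, days: int = 200, beta: float = 0.3,
            gamma: float = 0.1, i0: float = 0.001) -> List[float]:
    """Daily-step SIR model; returns the infected fraction for each day."""
    s, i = 1.0 - i0, i0
    trajectory = []
    for day in range(days):
        new_infections = beta * contact(day, i) * s * i
        new_recoveries = gamma * i
        s -= new_infections
        i += new_infections - new_recoveries
        trajectory.append(i)
    return trajectory


HYPOTHESES: Dict[str, Hypothesis] = {
    "no_change": no_change,
    "stay_at_home": stay_at_home,
    "fear_driven": fear_driven,
}

# One indicator (peak infected fraction) evaluated on each simulated trajectory.
peaks = {name: max(run_sir(h)) for name, h in HYPOTHESES.items()}
for name, peak in peaks.items():
    print(f"{name}: peak infected fraction = {peak:.3f}")
```

The design choice worth noting is that the hypothesis is a parameter of the run rather than part of the model code, so contradictory theories from different researchers can be added side by side and their trajectories compared against incoming data.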

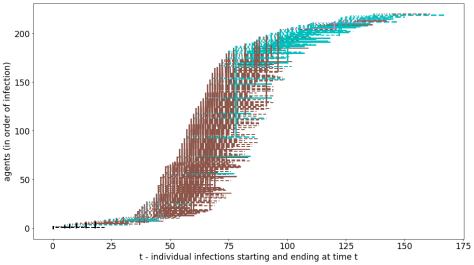

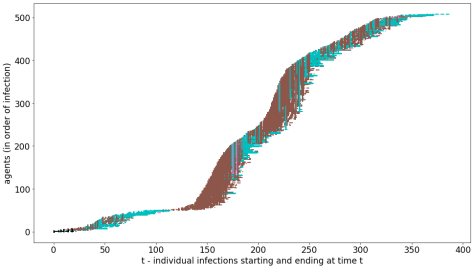

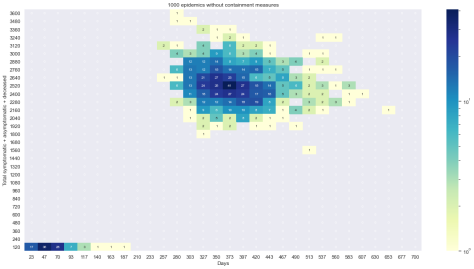

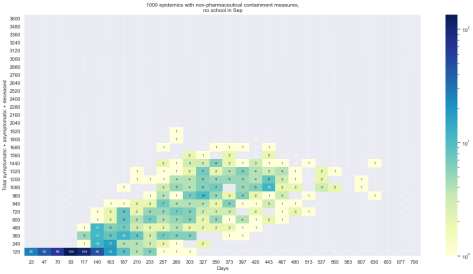

- The CoVprehension Collective (2020) showed another approach, more fluid in its organisation. The idea is “one question, one model”, and the constraint is to have a pedagogic result in which a simple phenomenon is illustrated. Different modellers could build one or several models on simple issues, so as to explain a simple phenomenon or paradox, or to demonstrate a tautological statement. In the process, the CoVprehension team would form shifting sub-teams, each working together on one specific issue and presenting their hypotheses and results in a very simple manner. Such a protocol was purely oriented towards explanation to the public, but the idea would be to organise a similar dynamic for policy makers. The system is cheap (it was self-organised by researchers and engineers, with no funding beyond their salaries) and it sustained lively discussions, with different points of view. Questions could range from differences between possible NPI, with an algorithmic description of these NPI that could make the understanding of processes more precise, to an explanation of why French supermarkets were running out of toilet paper. Twenty questions were answered in two months, thus indicating that such a working dynamic is feasible in real time and provides useful and interesting inputs to discussion.

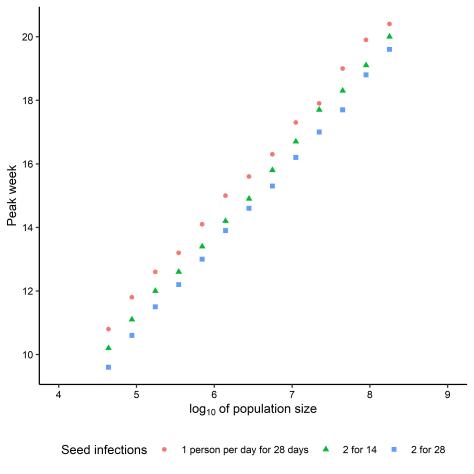

- To avoid too complicated a model, the fusion of both approaches could also be conceived: the addition of dimensions to a large central model could be first tested through simple models, the main process of explanation could be found and this process reproduced within the theoretical framework of the large model. This would constitute both a production of diversity of points of view and models and the aggregation of all points of view in one large model. The fact that the model should be large is important, as ‘size matters’ in diffusion models (e.g. Gotts & Polhill 2010), and thus simple, small models would benefit from this work as well.

As some modellers like complex models (and can think with the help of these models) and others rely on simple stories to increase their understanding of the world, only the creation of an open community of diverse specialists and modellers, KISS as well as KIDS, engaged in such a collective step-by-step elaboration, could resolve the central problem that ‘too complicated to understand’ is a relative, rather than absolute, assessment. One very important prerequisite of such collaboration is that there is genuine ‘horizontality’ of the community, where each participant is listened to seriously whatever their background; this can be an issue in interdisciplinary work, especially involving people at different career stages. Be that as it may, the central conjecture remains: agent-based modelling for policy analysis should be expected to involve even more complicated (assemblages of) models than empirical agent-based modelling.

Endnotes

[1] This point is the one that is the most disputed ex-post in France, where lockdowns were justified (as in other countries) to “protect hospitals”. In France, the idea was not to avoid deaths of older people (90% of deaths were people older than 60, this demographic being 20% of the population), but to avoid hospitals being overwhelmed with Covid cases taking the place of others. In France, the official data regarding hospital activity states that Covid cases represented 2% of hospitalizations and 5% of Intensive Care Unit (ICU) utilizations. Further, hospitals halved their workload from March to May 2020 because almost all surgery was blocked to keep ICUs free. (In October-December 2020, although the epidemic was more significant at that time, the same decision was not taken.) Arguably, 2% of 50% is not an increase that should destroy a functioning system – https://www.atih.sante.fr/sites/default/files/public/content/4144/aah_2020_analyse_covid.pdf – page 2. Fixing dysfunction in the UK’s National Health Service has been a long-standing, and somewhat tedious, political and academic debate in the country for years, even before Covid (e.g. Smith 2007; Mannion & Braithwaite 2012; Pope & Burnes 2013; Edwards & Palmer 2019).

[2] An interesting difference that French people heard about was that in the UK, people could wander on the beaches during lockdowns, whereas in France it was forbidden to go to any natural area – indeed, it was forbidden to go further than one kilometre from home. In fact, in the UK the lockdown restrictions were a ‘devolved matter’, with slightly different policies in each of the UK’s four member nations, though very similar legislation. In England, Section 6 paragraph (1) of The Health Protection (Coronavirus, Restrictions) (England) Regulations 2020 stated that “no person may leave the place where they are living without reasonable excuse”, with paragraph (2) covering examples of “reasonable excuses” including for exercise, obtaining basic necessities, and accessing public services. Similar wording was used by other devolved nations. None of the regulations stipulated any maximum distance from a person’s residence within which these activities had to take place – interpretation of the UK’s law is based on the behaviour of the ‘reasonable person’ (the so-called ‘man on the Clapham omnibus’ – see Łazowski 2021). However, differing interpretations of what ‘reasonable people’ would do between the citizenry and the constabulary led to fixed penalty notices being issued for taking exercise more than five miles (eight kilometres) from home – e.g. https://www.theguardian.com/uk-news/2021/jan/09/covid-derbyshire-police-to-review-lockdown-fines-after-walkers-given-200-penalties. In Scotland, though the Statutory Instrument makes no mention of any distance, people were ‘given guidance’ not to travel more than five miles from home for leisure and recreation, and were still advised to stay “within their local area” after this restriction was lifted (see https://www.gov.scot/news/travel-restrictions-lifted/).

[3] A problem which seems to be true in various countries https://www.unesco.org/en/articles/new-academic-year-begins-unesco-warns-only-one-third-students-will-return-school

https://www.kff.org/other/report/kff-cnn-mental-health-in-america-survey/

https://eu.usatoday.com/in-depth/news/health/2023/05/15/school-avoidance-becomes-crisis-after-covid/11127563002/#:~:text=School%20avoidant%20behavior%2C%20also%20called,since%20the%20COVID%2D19%20pandemic

https://www.bbc.com/news/health-65954131

[4] https://www.cityam.com/omicron-mild-compared-to-delta-south-african-doctors-say/

[5] https://www.terrafemina.com/article/coronavirus-un-psy-alerte-sur-les-risques-du-confinement-pour-la-sante-mentale_a353002/1

[6] In 1946, in El hacedor, Borges described a country where the art of building maps is so excessive in the need for details that the whole country is covered by the ideal map. This leads to obvious troubles and the disappearance of geographic science in this country.

[7] See Brewer et al. (2016) if the Akaike Information Criterion is leaping to your mind at this assertion.

[8] Although this assumption might not be stated that way anymore, as the hypothesis that many parts of the economy would suffer hugely started to prove true even before the end of the crisis: a problem that had only been anticipated by a few prominent economists (e.g. Boyer, 2020). This failure shows mainly that the description that most economists make of the economy is too simplistic – as is often objected – to be able to anticipate massive disruptions. Everywhere in the world the informal sector was almost completely stopped, as people could neither work in their job nor meet for informal market exchange, which caused misery for a huge part of the world’s population, among the most vulnerable (ILO, 2020).

[9] A real issue that became obvious is that nosocomial infections are (still) extremely important in hospitals: hospital-acquired Covid-19 infections are estimated at 20 to 40% during the first epidemic (Abbas et al. 2021).

Acknowledgements

GP’s work is supported by the Scottish Government Rural and Environment Science and Analytical Services Division (project reference JHI-C5-1).

References

Abbas, M., Nunes, T. R., Martischang, R., Zingg, W., Iten, A., Pittet, D. & Harbarth, S. (2021) Nosocomial transmission and outbreaks of coronavirus disease 2019: the need to protect both patients and healthcare workers. Antimicrobial Resistance & Infection Control 10, 7. doi:10.1186/s13756-020-00875-7

Boyer, R. (2020) Les capitalismes à l’épreuve de la pandémie, La découverte, Paris.

Brewer, M., Butler, A. & Cooksley, S. L. (2016) The relative performance of AIC, AICC and BIC in the presence of unobserved heterogeneity. Methods in Ecology and Evolution 7 (6), 679-692. doi:10.1111/2041-210X.12541

The CoVprehension Collective (2020) Understanding the current COVID-19 epidemic: one question, one model. Review of Artificial Societies and Social Simulation, 30th April 2020. https://rofasss.org/2020/04/30/covprehension/

Edmonds, B. (2022) Rigour for agent-based modellers. Presentation to the Social Simulation Conference 2022, Milan, Italy. https://cfpm.org/rigour/

Edmonds, B. & Moss, S. (2005) From KISS to KIDS – an ‘anti-simplistic’ modelling approach. Lecture Notes in Artificial Intelligence 3415, pp. 130-144. doi:10.1007/978-3-540-32243-6_11

Edwards, N. & Palmer, B. (2019) A preliminary workforce plan for the NHS. British Medical Journal 365 (8203), l4144. doi:10.1136/bmj.l4144

Gotts, N. M. & Polhill, J. G. (2010) Size matters: large-scale replications of experiments with FEARLUS. Advances in Complex Systems 13 (04), 453-467. doi:10.1142/S0219525910002670

ILO Brief (2020) Impact of lockdown measures on the informal economy. https://www.ilo.org/global/topics/employment-promotion/informal-economy/publications/WCMS_743523/lang--en/index.htm

Keen, S. (2021) The appallingly bad neoclassical economics of climate change. Globalizations 18 (7), 1149-1177. doi:10.1080/14747731.2020.1807856

Łazowski, A. (2021) Legal adventures of the man on the Clapham omnibus. In Urbanik, J. & Bodnar, A. (eds.) Περιμένοντας τους Bαρβάρους. Law in a Time of Constitutional Crisis: Studies Offered to Mirosław Wyrzykowski. C. H. Beck, Munich, Germany, pp. 415-426. doi:10.5771/9783748931232-415

Mannion, R. & Braithwaite, J. (2012) Unintended consequences of performance measurement in healthcare: 20 salutary lessons from the English National Health Service. Internal Medicine Journal 42 (5), 569-574. doi:10.1111/j.1445-5994.2012.02766.x

Pluchinotta, I., Daniell, K. A. & Tsoukiàs, A. (2022) Supporting decision making within the policy cycle: techniques and tools. In Howlett, M. (ed.) Handbook of Policy Tools. Routledge, London, pp. 235-244. doi:10.4324/9781003163954-24

Polhill, J. G. & Edmonds, B. (2023) Cognition and hypocognition: Discursive and simulation-supported decision-making within complex systems. Futures 148, 103121. doi:10.1016/j.futures.2023.103121

Pope, R. & Burnes, B. (2013) A model of organisational dysfunction in the NHS. Journal of Health Organization and Management 27 (6), 676-697. doi:10.1108/JHOM-10-2012-0207

Rouchier, J. (2023) Presentation to SocSimFest 23 during session ‘How to do wrong using Social Simulation – as a result of arrogance, laziness or ill intent’. https://cfpm.org/slides/JR-Epi+newTINA.pdf

Roy, B. (1996) Multicriteria methodology for decision analysis, Kluwer Academic Publishers.

Singh, S., Roy, D., Sinha, K., Parveen, S., Sharma, G. & Joshi, G. (2020) Impact of COVID-19 and lockdown on mental health of children and adolescents: a narrative review with recommendations. Psychiatry Research 293, 113429. doi:10.1016/j.psychres.2020.113429

Smith, I. (2007). Breaking the dysfunctional dynamics. In: Building a World-Class NHS. Palgrave Macmillan, London, pp. 132-177. doi:10.1057/9780230589704_5

Sun, Z., Lorscheid, I., Millington, J. D., Lauf, S., Magliocca, N. R., Groeneveld, J., Balbi, S., Nolzen, H., Müller, B., Schulze, J. & Buchmann, C. M. (2016) Simple or complicated agent-based models? A complicated issue. Environmental Modelling & Software 86, 56-67. doi:10.1016/j.envsoft.2016.09.006

Usta, J., Murr, H. & El-Jarrah, R. (2021) COVID-19 lockdown and the increased violence against women: understanding domestic violence during a pandemic. Violence and Gender 8 (3), 133-139. doi:10.1089/vio.2020.0069

Voinov, A. & Shugart, H. H. (2013) ‘Integronsters’, integral and integrated modeling. Environmental Modelling & Software 39, 149-158. doi:10.1016/j.envsoft.2012.05.014

Polhill, G. and Rouchier, J. (2023) Policy modelling requires a multi-scale, multi-criteria and diverse-framing approach. Review of Artificial Societies and Social Simulation, 31 Jul 2023. https://rofasss.org/2023/07/31/policy-modelling-necessitates-multi-scale-multi-criteria-and-a-diversity-of-framing

© The authors under the Creative Commons’ Attribution-NoDerivs (CC BY-ND) Licence (v4.0)