By Dino Carpentras¹, Edmund Chattoe-Brown²*, Bruce Edmonds³, Cesar García-Diaz⁴, Christian Kammler⁵, Anna Pagani⁶ and Nanda Wijermans⁷

*Corresponding author, ¹Centre for Social Issues Research, University of Limerick, ²School of Media, Communication and Sociology, University of Leicester, ³Centre for Policy Modelling, Manchester Metropolitan University, ⁴Department of Business Administration, Pontificia Universidad Javeriana, ⁵Department of Computing Science, Umeå University, ⁶Laboratory on Human-Environment Relations in Urban Systems (HERUS), École Polytechnique Fédérale de Lausanne (EPFL), ⁷Stockholm Resilience Centre, Stockholm University.

Introduction

This round table was convened to advance and improve the use of experimental methods in Agent-Based Modelling, in the hope that both existing and potential users of the method would be able to identify steps towards this aim[i]. The session began with a presentation by Bruce Edmonds (http://cfpm.org/slides/experiments%20and%20ABM.pptx) whose main argument was that the traditional idea of experimentation (controlling extensively for the environment and manipulating variables) was too simplistic to add much to the understanding of the sort of complex systems modelled by ABMs and that we should therefore aim to enhance experiments (for example using richer experimental settings, richer measures of those settings and richer data – like discussions between participants as well as their behaviour). What follows is a summary of the main ideas discussed organised into themed sections.

What Experiments Are

Defining the field of experiments proved to be challenging on two counts. The first was that there are a number of labels for potentially relevant approaches (experiments themselves – for example, Boero et al. 2010, gaming – for example, Tykhonov et al. 2008, serious games – for example Taillandier et al. 2019, companion/participatory modelling – for example, Ramanath and Gilbert 2004 and web based gaming – for example, Basole et al. 2013) whose actual content overlap is unclear. Is it the case that a gaming approach is generally more in line with the argument proposed by Edmonds? How can we systematically distinguish the experimental content of a serious game approach from a gaming approach? This seems to be a problem in immature fields where the labels are invented first (often on the basis of a few rather divergent instances) and the methodology has to grow into them. It would be ludicrous if we couldn’t be sure whether a piece of research was survey based or interview based (and this would radically devalue the associated labels if it were so.)

The second challenge, which also applies more generally in Agent-Based Modelling, is that the same labels are used differently by different researchers. It is not productive to argue about which uses are correct, but it is important that the concepts behind the different uses are clear so that a common scheme of labelling might ultimately be agreed. So, for example, experiment can be used (and different round table participants had different perspectives on the uses they expected) to mean laboratory experiments (simplified settings with human subjects – again see, for example, Boero et al. 2010), experiments with ABMs (formal experimentation with a model that doesn’t necessarily have any empirical content – for example, Doran 1998) and natural experiments (choice of cases in the real world to, for example, test a theory – see Dinesen 2013).

One approach that may help with this diversity is to start developing possible dimensions of experimentation. One might be degree of control (all the way from very stripped down behavioural laboratory experiments to natural situations where the only control is to select the cases). Another might be data diversity: From pure analysis of ABMs (which need not involve data at all), through laboratory experiments that record only behaviour to ethnographic collection and analysis of diverse data in rich experiments (like companion modelling exercises.) But it is important for progress that the field develops robust concepts that allow meaningful distinctions and does not get distracted into pointless arguments about labelling. Furthermore, we must consider the possible scientific implications of experimentation carried out at different points in the dimension space: For example, what are the relative strengths and limitations of experiments that are more or less controlled or more or less data diverse? Is there a “sweet spot” where the benefit of experiments is greatest to Agent-Based Modelling? If so, what is it and why?

The Philosophy of Experiment

A further challenge is the different beliefs (often associated with different disciplines) about the philosophical underpinnings of experiment, such as what we might mean by a cause. In an economic experiment, for example, the objective may be to confirm a universal theory of decision making through displayed behaviour only. (It is decisions described by this theory which are presumed to cause the pattern of observed behaviour.) This will probably not allow the researcher to discover that their basic theory is wrong (people are adaptive not rational after all) or not universal (agents have diverse strategies), or that some respondents simply didn’t understand the experiment (deviations caused by these phenomena may be labelled noise relative to the theory being tested but in fact they are not.)

By contrast qualitative sociologists believe that subjective accounts (including accounts of participation in the experiment itself) can be made reliable and that they may offer direct accounts of certain kinds of cause: If I say I did something for a certain reason then it is at least possible that I actually did (and that the reason I did it is therefore its cause). It is no more likely that agreement will be reached on these matters in the context of experiments than it has been elsewhere. But Agent-Based Modelling should keep its reputation for open mindedness by seeing what happens when qualitative data is also collected and not just rejecting that approach out of hand as something that is “not done”. There is no need for Agent-Based Modelling blindly to follow the methodology of any one existing discipline in which experiments are conducted (and these disciplines often disagree vigorously on issues like payment and deception with no evidence on either side which should also make us cautious about their self-evident correctness.)

Finally, there is a further complication in understanding experiments using analogies with the physical sciences. In understanding the evolution of a river system, for example, one can control/intervene, one can base theories on testable micro mechanisms (like percolation) and one can observe. But there is no equivalent to asking the river what it intends (whether we can do this effectively in social science or not).[ii] It is not totally clear how different kinds of data collection like these might relate to each other in the social sciences, for example, data from subjective accounts, behavioural experiments (which may show different things from what respondents claim) and, for example, brain scans (which side step the social altogether.) This relationship between different kinds of data currently seems incompletely explored and conceptualised. (There is a tendency just to look at easy cases like surveys versus interviews.)

The Challenge of Experiments as Practical Research

This is an important area where the actual and potential users of experiments participating in the round table diverged. Potential users wanted clear guidance on the resources, skills and practices involved in doing experimental work (and see similar issues in the behavioural strategy literature, for example, Reypens and Levine 2018). At the most basic level, when does a researcher need to do an experiment (rather than a survey, interviews or observation)? What are the resource requirements in terms of time, facilities and money (laboratory experiments are unusual in often needing specific funding to pay respondents rather than substituting the researcher working for free)? What design decisions need to be made (paying subjects, online or offline, can subjects be deceived)? How should the data be analysed (and how should an ABM be validated against experimental data)?[iii] (There are also pros and cons to specific bits of potentially supporting technology like Amazon Mechanical Turk, Qualtrics and Prolific, which have not yet been documented and systematically compared for the novice with a background in Agent-Based Modelling.) There is much discussion about these matters in the traditional literatures of social sciences that do experiments (see, for example, Kagel and Roth 1995, Levine and Parkinson 1994 and Zelditch 2014) but this has not been summarised and tuned specifically for the needs of Agent-Based Modellers (or published where they are likely to see it).

However, it should not be forgotten that not all research efforts need this integration within the same project, so thinking about the problems that really need it is critical. Nonetheless, triangulation is indeed necessary within research programmes. For instance, in subfields such as strategic management and organisational design, it is uncommon to see an ABM integrated with an experiment as part of the same project (though there are exceptions, such as Vuculescu 2017). Instead, ABMs are typically used to explore “what if” scenarios, build process theories and illuminate potential empirical studies. In this approach, knowledge is accumulated instead through the triangulation of different methodologies in different projects (see Burton and Obel 2018). Additionally, modelling and experimental efforts are usually led by different specialists – for example, there is a Theoretical Organisational Models Society whose focus is the development of standards for theoretical organisation science.

In a relatively new and small area, all we often have is some examples of good practice (or more contentiously bad practice) of which not everyone is even aware. A preliminary step is thus to see to what extent people know of good practice and are able to agree that it is good (and perhaps why it is good).

Finally, there was a slightly separate discussion about the perspectives of experimental participants themselves. It may be that a general problem with unreal activity is that you know it is unreal (which may lead to problems with ecological validity – Bornstein 1999.) On the other hand, building on the enrichment argument put forward by Edmonds (above), there is at least anecdotal observational evidence that richer and more realistic settings may cause people to get “caught up” and perhaps participate more as they would in reality. Nonetheless, there are practical steps we can take to learn more about these phenomena by augmenting experimental designs. For example, we might conduct interviews (or even group discussions) before and after experiments. This could make the initial biases of participants explicit and allow them to self-evaluate retrospectively the extent to which they got engaged (or perhaps even over-engaged) during the game. The first such questionnaire could be available before attending the experiment, whilst another could be administered right after the game (and perhaps even a third a week later). In addition to practical design solutions, there are also relevant existing literatures that experimental researchers should probably draw on in this area, for example that on systemic design and the associated concept of worldviews. It is fair to say that we do not yet fully understand the issues here, but they clearly matter to the value of experimental data for Agent-Based Modelling.[iv]

Design of Experiments

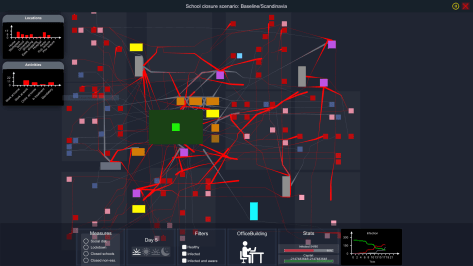

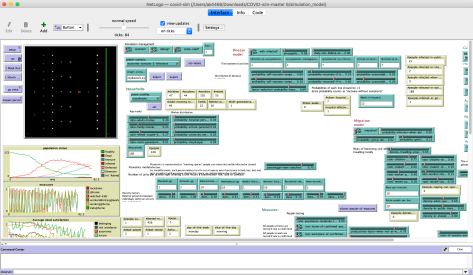

Something that came across strongly in the round table discussion as argued by existing users of experimental methods was the desirability of either designing experiments directly based on a specific ABM structure (rather than trying to use a stripped down – purely behavioural – experiment) or mixing real and simulated participants in richer experimental settings. In line with the enrichment argument put forward by Edmonds, nobody seemed to be using stripped down experiments to specify, calibrate or validate ABM elements piecemeal. In the examples provided by round table participants, experiments corresponding closely to the ABM (and mixing real and simulated participants) seemed particularly valuable in tackling subjects that existing theory had not yet really nailed down or where it was clear that very little of the data needed for a particular ABM was available. But there was no sense that there is a clearly defined set of research designs with associated purposes on which the potential user can draw. (The possible role of experiments in supporting policy was also mentioned but no conclusions were drawn.)

Extracting Rich Data from Experiments

Traditional experiments are time consuming to do, so they are frequently optimised to obtain the maximum power and discrimination between factors of interest. In such situations they will often limit their data collection to what is strictly necessary for testing their hypotheses. Furthermore, it seems to be a hangover from behaviourist psychology that one does not use self-reporting on the grounds that it might be biased or simply involve false reconstruction (rationalisation). From the point of view of building or assessing ABMs this approach involves a wasted opportunity. Due to the flexible nature of ABMs there is a need for as many empirical constraints upon modelling as possible. These constraints can come from theory, evidence or abstract principles (such as simplicity). They should not hinder the design of an ABM but rather act as a check on its outcomes. Game-like situations can provide rich data about what is happening, simultaneously capturing decisions on action, the position and state of players, global game outcomes/scores and what players say to each other (see, for example, Janssen et al. 2010, Lindahl et al. 2021). Often, in social science one might have a survey with one set of participants, interviews with others and longitudinal data from yet others – even if these, in fact, involve the same people, the data will usually not indicate this through consistent IDs. When collecting data from a game (and especially from online games) there is a possibility for collecting linked data with consistent IDs – including interviews – that allows for a whole new level of ABM development and checking.
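
As a purely illustrative sketch of what linked data with consistent IDs might look like in practice, the following Python fragment groups three kinds of record from a single game session under a shared participant ID. Everything here is an assumption made for illustration: the field names (`participant_id`, `round`, `stage` and so on), the example records and the helper `link_by_participant` are hypothetical and do not come from any of the studies cited.

```python
from collections import defaultdict

# Hypothetical records from one game session. All field names and
# values are illustrative assumptions, not data from any cited study.
actions = [
    {"participant_id": "P01", "round": 1, "action": "harvest", "amount": 3},
    {"participant_id": "P02", "round": 1, "action": "harvest", "amount": 5},
    {"participant_id": "P01", "round": 2, "action": "harvest", "amount": 2},
]
chat = [
    {"participant_id": "P02", "round": 1, "text": "Shall we all take less?"},
]
interviews = [
    {"participant_id": "P01", "stage": "post", "note": "Tried to match the group."},
]


def link_by_participant(**sources):
    """Group the records of each named source under a shared participant ID."""
    # Every participant gets one (possibly empty) list per data source.
    linked = defaultdict(lambda: {name: [] for name in sources})
    for name, records in sources.items():
        for record in records:
            linked[record["participant_id"]][name].append(record)
    return dict(linked)


linked = link_by_participant(actions=actions, chat=chat, interviews=interviews)

# Behaviour, talk and interview material for one person now sit side by side.
for participant_id, data in sorted(linked.items()):
    print(participant_id, {name: len(records) for name, records in data.items()})
```

The design choice that matters is simply that every record, whatever its source, carries the same identifier, so that what a participant did, said and later reported can be compared directly when specifying or checking an ABM.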

Standards and Institutional Bootstrapping

This is also a wider problem in newer methods like Agent-Based Modelling. How can we foster agreement about what we are doing (which has to build on clear concepts) and institutionalise those agreements into standards for a field (particularly when there is academic competition and pressure to publish)?[v] If certain journals will not publish experiments (or experiments done in certain ways) what can we do about that? JASSS was started because it was so hard to publish ABMs. It has certainly made that easier but is there a cost through less publication in other journals? See, for example, Squazzoni and Casnici (2013). Would it have been better for the rigour and wider acceptance of Agent-Based Modelling if we had met the standards of other fields rather than setting our own? This strategy, harder in the short term, may also have promoted communication and collaboration better in the long term. If reviewing is arbitrary (reviewers do not seem to have a common view of what makes an experiment legitimate) then can that situation be improved (and, in particular, how do we best go about that with limited resources)? To some extent, normal individualised academic work may achieve progress here (researchers make proposals, dispute and refine them and their resulting quality ensures at least some individualised adoption by other researchers) but there is often an observable gap in performance: Even though most modellers will endorse the value of data for modelling in principle, most models are still non-empirical in practice (Angus and Hassani-Mahmooei 2015, Figure 9). The jury is still out on the best way to improve reviewer consistency, use the power of peer review to impose better standards (and thus resolve a collective action problem under academic competition[vi]) and so on, but recognising and trying to address these issues is clearly important to the health of experimental methods in Agent-Based Modelling.

Since running experiments in association with ABMs is already challenging, adding the problem of arbitrary reviewer standards makes the publication process even harder. This discourages scientists from following this path and therefore retards this kind of research generally. Again, here, useful resources (like the Psychological Science Accelerator, which facilitates greater experimental rigour by various means) were suggested in discussion as raw material for our own improvements to experiments in Agent-Based Modelling.

Another issue with newer methods such as Agent-Based Modelling is the path to legitimation before the wider scientific community. The need to integrate ABMs with experiments does not necessarily imply that the legitimation of the former is achieved by the latter. Experimental economists, for instance, may still argue that (in the investigation of behaviour and its implications for policy issues), experiments and data analysis alone suffice. They may rightly ask: What is the additional usefulness of an ABM? If an ABM always needs to be justified by an experiment and then validated by a statistical model of its output, then the method might not be essential at all. Orthodox economists skip the Agent-Based Modelling part: They build behavioural experiments, gather (rich) data, run econometric models and make predictions, without the need (at least as they see it) to build any computational representation. Of course, the usefulness of models lies in the premise that they may tell us something that experiments alone cannot (see Knudsen et al. 2019). But progress needs to be made in understanding (and perhaps reconciling) these divergent positions. The social simulation community therefore needs to be clearer about exactly what ABMs can contribute beyond the limitations of an experiment, especially when addressing audiences of non-modellers (Ballard et al. 2021). Not only is a model valuable when rigorously validated against data, but also whenever it makes sense of the data in ways that traditional methods cannot.

Where Now?

Researchers usually have more enthusiasm than they have time. In order to make things happen in an academic context it is not enough to have good ideas; people need to sign up and run with them. There are many things that stand a reasonable chance of improving the profile and practice of experiments in Agent-Based Modelling (regular sessions at SSC, systematic reviews, practical guidelines and evaluated case studies, discussion groups, books or journal special issues, training and funding applications that build networks and teams) but to a great extent, what happens will be decided by those who make it happen. The organisers of this round table (Nanda Wijermans and Edmund Chattoe-Brown) are very keen to support and coordinate further activity and this summary of discussions is the first step to promote that. We hope to hear from you.

References

Angus, Simon D. and Hassani-Mahmooei, Behrooz (2015) ‘“Anarchy” Reigns: A Quantitative Analysis of Agent-Based Modelling Publication Practices in JASSS, 2001-2012’, Journal of Artificial Societies and Social Simulation, 18(4), October, article 16, <http://jasss.soc.surrey.ac.uk/18/4/16.html>. doi:10.18564/jasss.2952

Ballard, Timothy, Palada, Hector, Griffin, Mark and Neal, Andrew (2021) ‘An Integrated Approach to Testing Dynamic, Multilevel Theory: Using Computational Models to Connect Theory, Model, and Data’, Organizational Research Methods, 24(2), April, pp. 251-284. doi: 10.1177/1094428119881209

Basole, Rahul C., Bodner, Douglas A. and Rouse, William B. (2013) ‘Healthcare Management Through Organizational Simulation’, Decision Support Systems, 55(2), May, pp. 552-563. doi:10.1016/j.dss.2012.10.012

Boero, Riccardo, Bravo, Giangiacomo, Castellani, Marco and Squazzoni, Flaminio (2010) ‘Why Bother with What Others Tell You? An Experimental Data-Driven Agent-Based Model’, Journal of Artificial Societies and Social Simulation, 13(3), June, article 6, <https://www.jasss.org/13/3/6.html>. doi:10.18564/jasss.1620

Bornstein, Brian H. (1999) ‘The Ecological Validity of Jury Simulations: Is the Jury Still Out?’ Law and Human Behavior, 23(1), February, pp. 75-91. doi:10.1023/A:1022326807441

Burton, Richard M. and Obel, Børge (2018) ‘The Science of Organizational Design: Fit Between Structure and Coordination’, Journal of Organization Design, 7(1), December, article 5. doi:10.1186/s41469-018-0029-2

Derbyshire, James (2020) ‘Answers to Questions on Uncertainty in Geography: Old Lessons and New Scenario Tools’, Environment and Planning A: Economy and Space, 52(4), June, pp. 710-727. doi:10.1177/0308518X19877885

Dinesen, Peter Thisted (2013) ‘Where You Come From or Where You Live? Examining the Cultural and Institutional Explanation of Generalized Trust Using Migration as a Natural Experiment’, European Sociological Review, 29(1), February, pp. 114-128. doi:10.1093/esr/jcr044

Doran, Jim (1998) ‘Simulating Collective Misbelief’, Journal of Artificial Societies and Social Simulation, 1(1), January, article 3, <https://www.jasss.org/1/1/3.html>.

Janssen, Marco A., Holahan, Robert, Lee, Allen and Ostrom, Elinor (2010) ‘Lab Experiments for the Study of Social-Ecological Systems’, Science, 328(5978), 30 April, pp. 613-617. doi:10.1126/science.1183532

Kagel, John H. and Roth, Alvin E. (eds.) (1995) The Handbook of Experimental Economics (Princeton, NJ: Princeton University Press).

Knudsen, Thorbjørn, Levinthal, Daniel A. and Puranam, Phanish (2019) ‘Editorial: A Model is a Model’, Strategy Science, 4(1), March, pp. 1-3. doi:10.1287/stsc.2019.0077

Levine, Gustav and Parkinson, Stanley (1994) Experimental Methods in Psychology (Hillsdale, NJ: Lawrence Erlbaum Associates).

Lindahl, Therese, Janssen, Marco A. and Schill, Caroline (2021) ‘Controlled Behavioural Experiments’, in Biggs, Reinette, de Vos, Alta, Preiser, Rika, Clements, Hayley, Maciejewski, Kristine and Schlüter, Maja (eds.) The Routledge Handbook of Research Methods for Social-Ecological Systems (London: Routledge), pp. 295-306. doi:10.4324/9781003021339-25

Ramanath, Ana Maria and Gilbert, Nigel (2004) ‘The Design of Participatory Agent-Based Social Simulations’, Journal of Artificial Societies and Social Simulation, 7(4), October, article 1, <https://www.jasss.org/7/4/1.html>.

Reypens, Charlotte and Levine, Sheen S. (2018) ‘Behavior in Behavioral Strategy: Capturing, Measuring, Analyzing’, in Behavioral Strategy in Perspective, Advances in Strategic Management Volume 39 (Bingley: Emerald Publishing), pp. 221-246. doi:10.1108/S0742-332220180000039016

Squazzoni, Flaminio and Casnici, Niccolò (2013) ‘Is Social Simulation a Social Science Outstation? A Bibliometric Analysis of the Impact of JASSS’, Journal of Artificial Societies and Social Simulation, 16(1), January, article 10, <http://jasss.soc.surrey.ac.uk/16/1/10.html>. doi:10.18564/jasss.2192

Taillandier, Patrick, Grignard, Arnaud, Marilleau, Nicolas, Philippon, Damien, Huynh, Quang-Nghi, Gaudou, Benoit and Drogoul, Alexis (2019) ‘Participatory Modeling and Simulation with the GAMA Platform’, Journal of Artificial Societies and Social Simulation, 22(2), March, article 3, <https://www.jasss.org/22/2/3.html>. doi:10.18564/jasss.3964

Tykhonov, Dmytro, Jonker, Catholijn, Meijer, Sebastiaan and Verwaart, Tim (2008) ‘Agent-Based Simulation of the Trust and Tracing Game for Supply Chains and Networks’, Journal of Artificial Societies and Social Simulation, 11(3), June, article 1, <https://www.jasss.org/11/3/1.html>.

Vuculescu, Oana (2017) ‘Searching Far Away from the Lamp-Post: An Agent-Based Model’, Strategic Organization, 15(2), May, pp. 242-263. doi:10.1177/1476127016669869

Zelditch, Morris Junior (2014) ‘Laboratory Experiments in Sociology’, in Webster, Murray Junior and Sell, Jane (eds.) Laboratory Experiments in the Social Sciences (New York, NY: Elsevier), pp. 183-197.

Notes

[i] This event was organised (and the resulting article was written) as part of “Towards Realistic Computational Models of Social Influence Dynamics” a project funded through ESRC (ES/S015159/1) by ORA Round 5 and involving Bruce Edmonds (PI) and Edmund Chattoe-Brown (CoI). More about SSC2021 (Social Simulation Conference 2021) can be found at https://ssc2021.uek.krakow.pl

[ii] This issue is actually very challenging for social science more generally. When considering interventions in social systems, knowing and acting might be so deeply intertwined (Derbyshire 2020) that interventions may modify the same behaviours that an experiment is aiming to understand.

[iii] In addition, experiments often require institutional ethics approval (but so do interviews, gaming activities and other sorts of empirical research of course), something with which non-empirical Agent-Based Modellers may have little experience.

[iv] Chattoe-Brown had interesting personal experience of this. He took part in a simple team gaming exercise about running a computer firm. The team quickly worked out that the game assumed an infinite return to advertising (so you could have a computer magazine consisting entirely of adverts) independent of the actual quality of the product. They thus simultaneously performed very well in the game from the perspective of an external observer but remained deeply sceptical that this was a good lesson to impart about running an actual firm. But since the coordinators never asked the team members for their subjective view, they may have assumed that the simulation was also a success in its didactic mission.

[v] We should also not assume it is best to set our own standards from scratch. It may be valuable to attempt integration with existing approaches, like qualitative validity (https://conjointly.com/kb/qualitative-validity/) particularly when these are already attempting to be multidisciplinary and/or to bridge the gap between, for example, qualitative and quantitative data.

[vi] Although journals also face such a collective action problem at a different level. If they are too exacting relative to their status and existing practice, researchers will simply publish elsewhere.

Dino Carpentras, Edmund Chattoe-Brown, Bruce Edmonds, Cesar García-Diaz, Christian Kammler, Anna Pagani and Nanda Wijermans (2021) Where Now For Experiments In Agent-Based Modelling? Report of a Round Table as Part of SSC2021. Review of Artificial Societies and Social Simulation, 2nd November 2021. https://rofasss.org/2021/11/02/round-table-ssc2021-experiments/